👋 Zhexi Luo

I am Zhexi Luo, an undergraduate in Computer Science at Sun Yat-sen University, where I conduct research at ISEE@SYSU. I am currently a research intern at LinS Lab, advised by Lin Shao. My research interests lie in robot learning: I aim to integrate foundation models into robotic systems to enable general, dexterous, and robust manipulation, with a focus on learning frameworks for reliable control in unstructured environments. If you have any ideas or thoughts related to my research, feel free to reach out!

📰 News

📚 Research

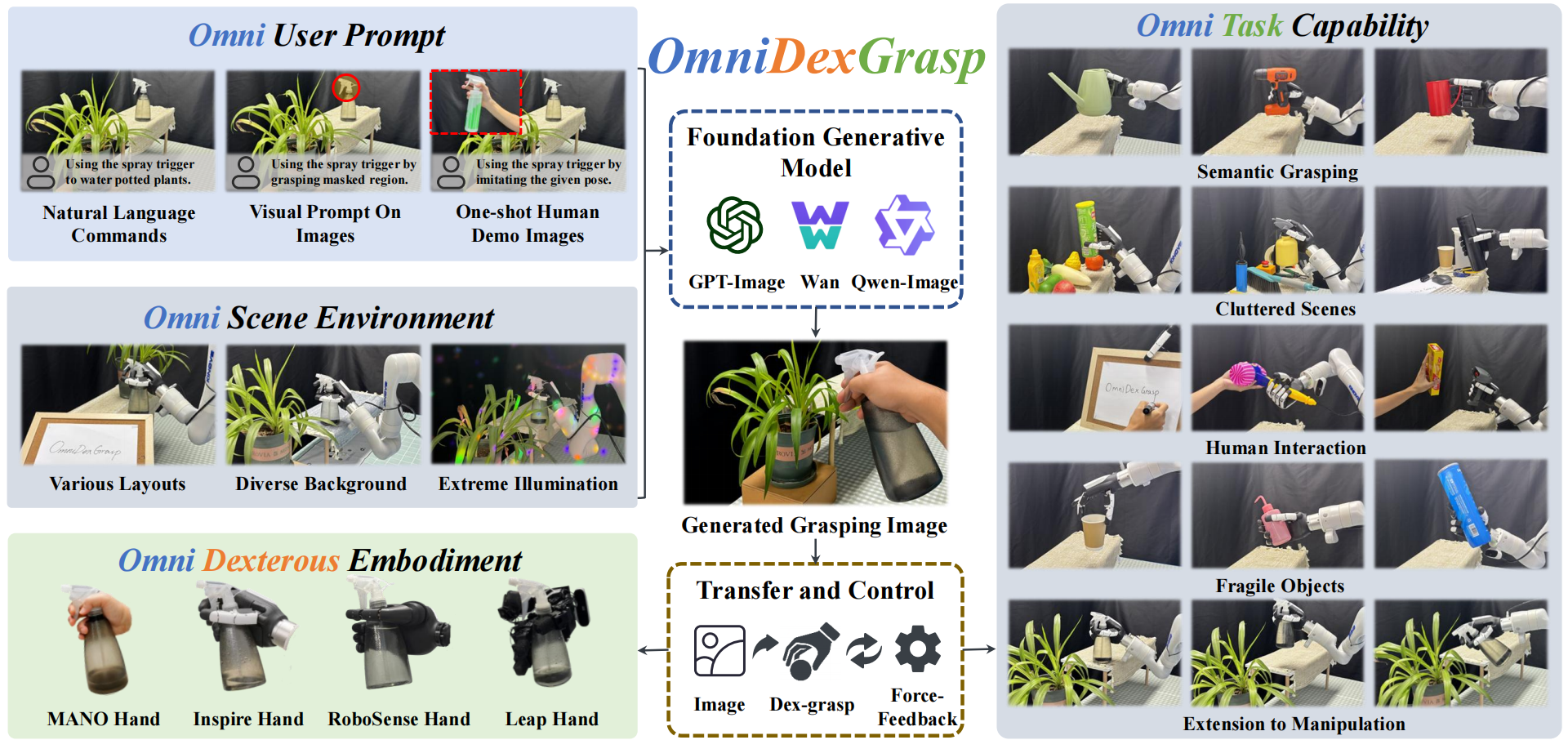

OmniDexGrasp: Generalizable Dexterous Grasping via Foundation Model and Force Feedback

Yi-Lin Wei*, Zhexi Luo*, Yuhao Lin, Mu Lin, Zhizhao Liang, Shuoyu Chen, Wei-Shi Zheng

Accepted, IEEE International Conference on Robotics and Automation (ICRA), 2026

arXiv / project page / code

A generalizable dexterous grasping framework that leverages generative foundation models to achieve omni-capabilities across diverse user prompts, dexterous embodiments, and grasping tasks.

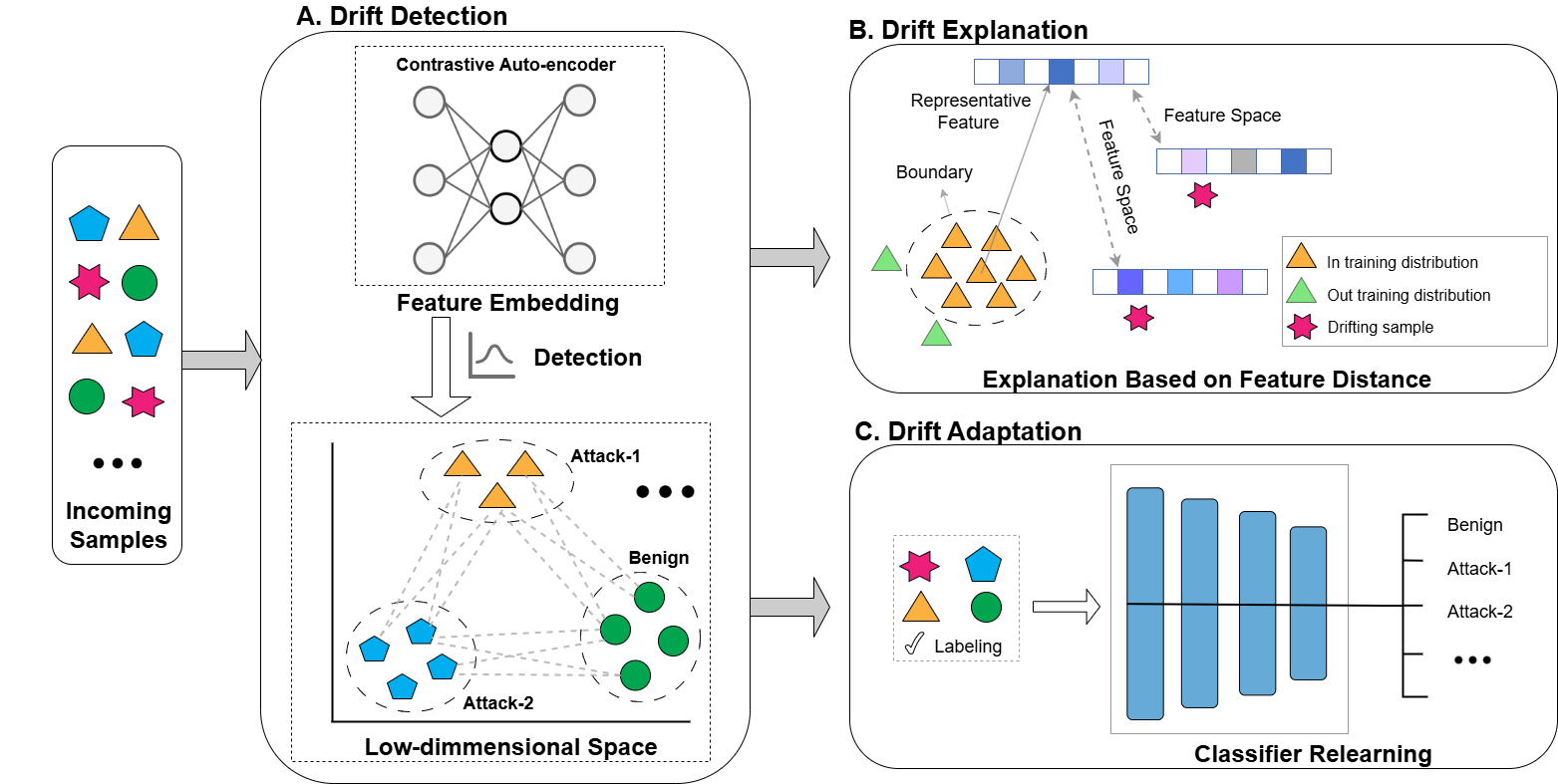

DriftTrace: Combating Concept Drift in Security Applications through Detection and Explanation

Yuedong Pan, Lixin Zhao, Tao Leng, Zhexi Luo, Lijun Cai, Aimin Yu, Dan Meng

Accepted, IEEE Transactions on Information Forensics and Security (T-IFS), 2026

IEEE Xplore

A unified framework that detects, explains, and adapts to out-of-distribution (OOD) data to improve model robustness in dynamic open-world environments.

🔬 Project

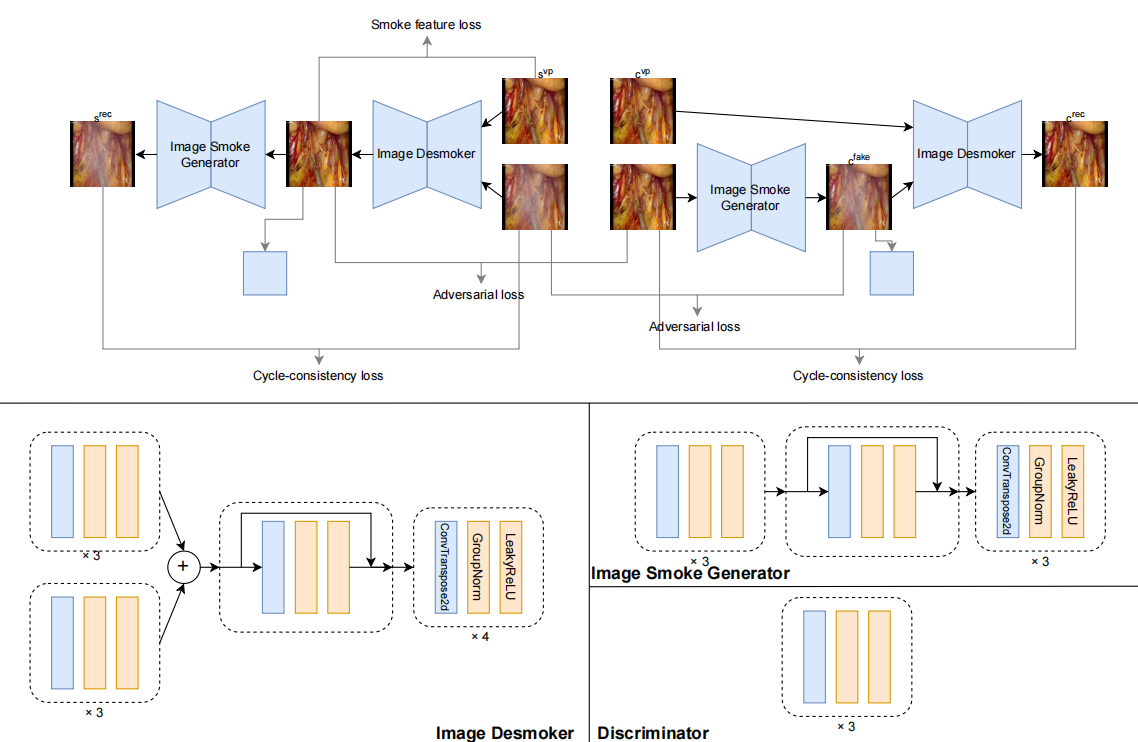

Smoke Removal in Laparoscopic Surgical Videos Using Temporal Smoke-Free Semantic Information

Developed a framework that integrates video prediction and image desmoking to remove surgical smoke from laparoscopic videos. By leveraging temporal semantic information from smoke-free frames within a CycleGAN-based architecture, the framework achieves real-time smoke removal and outperforms existing approaches, improving surgical visibility and safety.

💼 Experience

LinS Lab @ NUS: Research Intern, advised by Lin Shao (2025.11 - Present)

Sun Yat-sen University: Bachelor's in Computer Science and Technology (2022 - Present)

🏆 Awards